Using Azure Cognitive Services to Caption Video

In this post we will use Microsoft Azure Cognitive Services (the Computer Vision API) to caption frames captured from a webcam in real time.

We will start by importing some libraries:

- `datetime` for displaying the current time on our labels
- `requests` to make requests to the Cognitive Services API
- `cv2` (OpenCV) for streaming images from our webcam


```python
import datetime

import requests
import cv2  # OpenCV
```

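You will need an Azure Cognitive Services subscription key. Paste it below, and make sure the endpoint URL matches the region your key was issued for (West Europe in this example).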


```python
subscription_key = "YOUR_SUBSCRIPTION_KEY"  # your subscription key here

vision_base_url = "https://westeurope.api.cognitive.microsoft.com/vision/v1.0/"
vision_analyze_url = vision_base_url + "analyze"
```
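Hard-coding the key in a notebook makes it easy to leak when the notebook is shared. A minimal sketch of an alternative, assuming you store the key in an environment variable with the hypothetical name `AZURE_VISION_KEY`, and that your region's endpoint follows the same `https://<region>.api.cognitive.microsoft.com/vision/v1.0/` pattern as the URL above:

```python
import os


def analyze_url(region: str = "westeurope") -> str:
    """Build the analyze endpoint URL for a region (pattern taken from the URL above)."""
    return f"https://{region}.api.cognitive.microsoft.com/vision/v1.0/analyze"


def load_subscription_key(env_var: str = "AZURE_VISION_KEY") -> str:
    """Read the key from an environment variable (the variable name is hypothetical)."""
    key = os.environ.get(env_var)
    if not key:
        raise RuntimeError(f"Set the {env_var} environment variable to your subscription key")
    return key


vision_analyze_url = analyze_url("westeurope")
```

With the variable set, `subscription_key = load_subscription_key()` replaces the hard-coded string, and the key never appears in the notebook itself.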

Next we define a function that encodes a webcam frame as a JPEG, sends it to the Cognitive Services analyze endpoint, and returns the first caption prefixed with the time of the request, ready to overlay on the image.


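```python
def get_image_caption(image):
    """Send a frame to the Vision analyze endpoint and return a timestamped caption."""
    # Encode the frame as JPEG bytes for the request body
    # (ndarray.tobytes() replaces the deprecated tostring())
    success, buffer = cv2.imencode('.jpg', image)
    if not success:
        raise ValueError("Could not encode frame as JPEG")
    img = buffer.tobytes()

    # Request headers
    headers = {'Content-Type': 'application/octet-stream',
               'Ocp-Apim-Subscription-Key': subscription_key}

    # Request params
    params = {'visualFeatures': 'Categories,Description,Color'}

    # Make a request to the Azure Cognitive Services Vision Analysis API
    response = requests.post(vision_analyze_url,
                             params=params,
                             headers=headers,
                             data=img)

    # Fail loudly on HTTP errors (e.g. a wrong key or region)
    response.raise_for_status()

    # Extract the json from the response
    analysis = response.json()

    # Extract the first caption from the output json
    image_caption = analysis["description"]["captions"][0]["text"].capitalize()

    time_now = datetime.datetime.now().strftime("%Y-%m-%d %H:%M:%S")

    # Caption with current time
    return time_now + ": " + image_caption
```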
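`get_image_caption` assumes the response always contains at least one caption; if `captions` comes back empty, indexing `[0]` raises an `IndexError`. Also, `str.capitalize()` lower-cases every character after the first, so proper nouns in a caption lose their capitals. A more defensive sketch of the extraction step follows. The helper names `extract_caption` and `stamp`, the fallback string, and the sample dictionary are all made up for illustration; the sample is shaped only like the fields the function above reads (`description`, then `captions`, then `text`) and is not real API output.

```python
import datetime
from typing import Any, Optional


def extract_caption(analysis: dict, fallback: str = "No caption available") -> str:
    """Return the first caption's text with only its first letter upper-cased."""
    captions = analysis.get("description", {}).get("captions", [])
    if not captions:
        return fallback
    text = captions[0].get("text", "")
    if not text:
        return fallback
    # Upper-case the first character only; str.capitalize() would also
    # lower-case the rest of the caption.
    return text[:1].upper() + text[1:]


def stamp(caption: str, now: Optional[datetime.datetime] = None) -> str:
    """Prefix a caption with a timestamp in the same format as get_image_caption."""
    now = now or datetime.datetime.now()
    return now.strftime("%Y-%m-%d %H:%M:%S") + ": " + caption


# Illustrative response fragment (made up for this example)
sample: dict[str, Any] = {
    "description": {"captions": [{"text": "a view of London Bridge at night", "confidence": 0.9}]}
}
```

Inside `get_image_caption`, the last few statements could then become `return stamp(extract_caption(analysis))`, so a frame with no caption shows the fallback text instead of stopping the video loop.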

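We keep a frame counter and send a request to the Cognitive Services API whenever the counter reaches a multiple of 50. The returned caption is overlaid on each webcam frame until the next caption arrives. Because the request is made synchronously, the video pauses briefly while each caption is fetched.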

Press the `q` key (with the video window focused) to quit at any point.
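Counting frames ties the request rate to the webcam's frame rate, which differs between cameras and can drop in poor lighting. One alternative, which is not what the loop below does, is to throttle by elapsed wall-clock time. A minimal sketch; the class name `CaptionThrottle` and the 2-second interval are illustrative choices, and the clock is injectable so the logic can be checked without a camera:

```python
import time
from typing import Callable, Optional


class CaptionThrottle:
    """Allow at most one caption request per `interval` seconds."""

    def __init__(self, interval: float = 2.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.interval = interval
        self.clock = clock
        # Time of the last allowed request; None until the first one
        self._last: Optional[float] = None

    def ready(self) -> bool:
        """Return True (and record the time) if a new request is due."""
        now = self.clock()
        if self._last is None or now - self._last >= self.interval:
            self._last = now
            return True
        return False
```

To use it, create `throttle = CaptionThrottle()` before the loop and replace `if count % 50 == 0:` with `if throttle.ready():`. The first frame then gets a caption immediately, and later requests happen at a steady rate regardless of frame rate. `time.monotonic` is used rather than `time.time` so that system clock adjustments cannot stall or burst the requests.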


```python
stream = cv2.VideoCapture(0)

count = 0
overlay_text = ""

while True:
    # Read the next frame from the live webcam stream
    grabbed, frame = stream.read()
    if not grabbed:
        break  # camera unavailable or stream ended

    # Draw on a copy so the captured frame is left untouched
    output = frame.copy()

    # Get a new caption every 50 frames (including the first frame)
    if count % 50 == 0:
        overlay_text = get_image_caption(output)

    # Overlay the most recent caption on the image
    cv2.putText(output, overlay_text, (10, 30),
                cv2.FONT_HERSHEY_SIMPLEX, 0.5, (0, 0, 0), 2)

    # Show the frame
    cv2.imshow("Image", output)

    key = cv2.waitKey(1) & 0xFF
    count += 1

    # Press q to break out of the loop
    if key == ord("q"):
        break

# Cleanup: release the camera and close the window.
# The extra waitKey calls let OpenCV process the close events,
# a common workaround so the window actually disappears on some platforms.
stream.release()
cv2.waitKey(1)
cv2.destroyAllWindows()
cv2.waitKey(1)
```


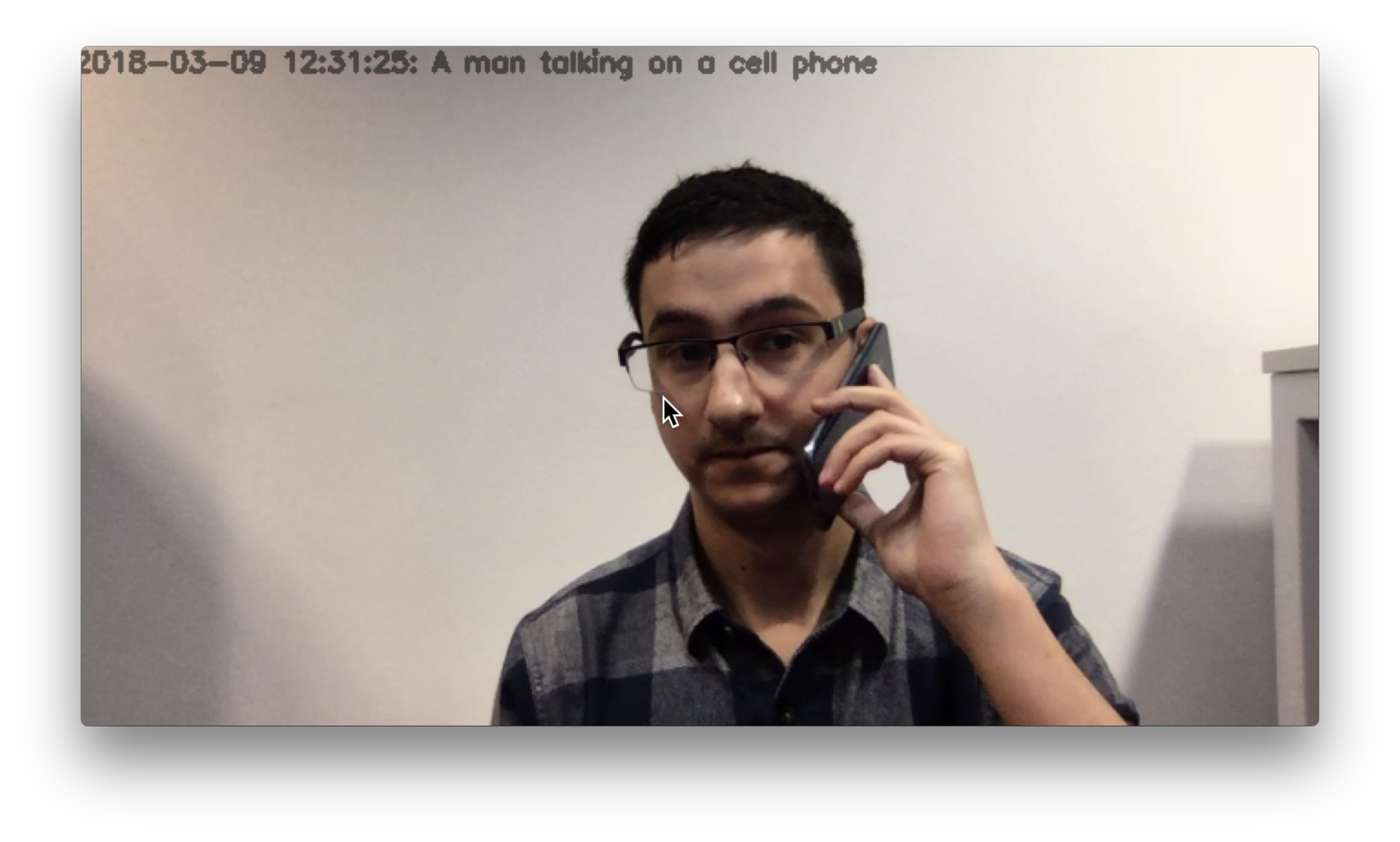

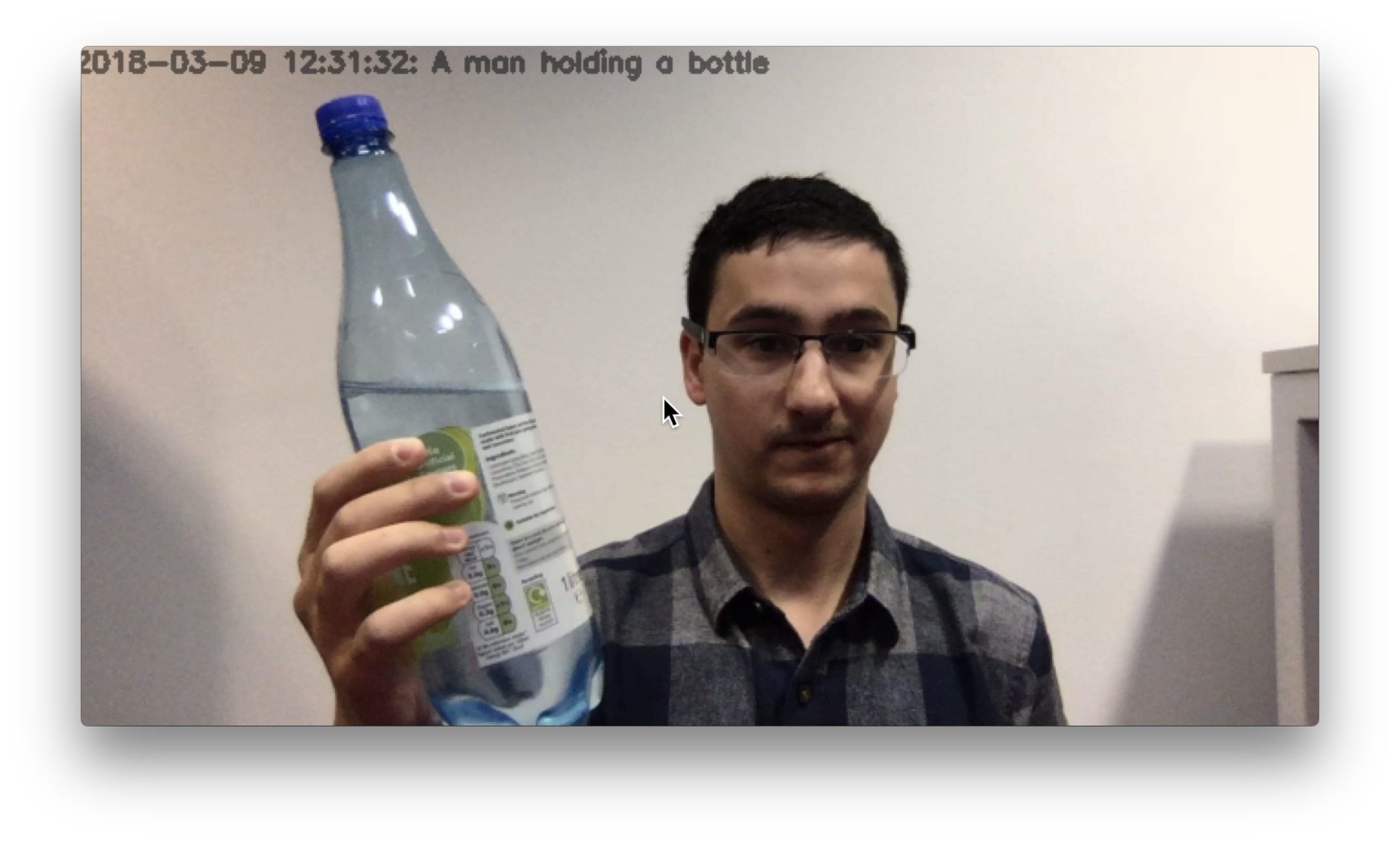

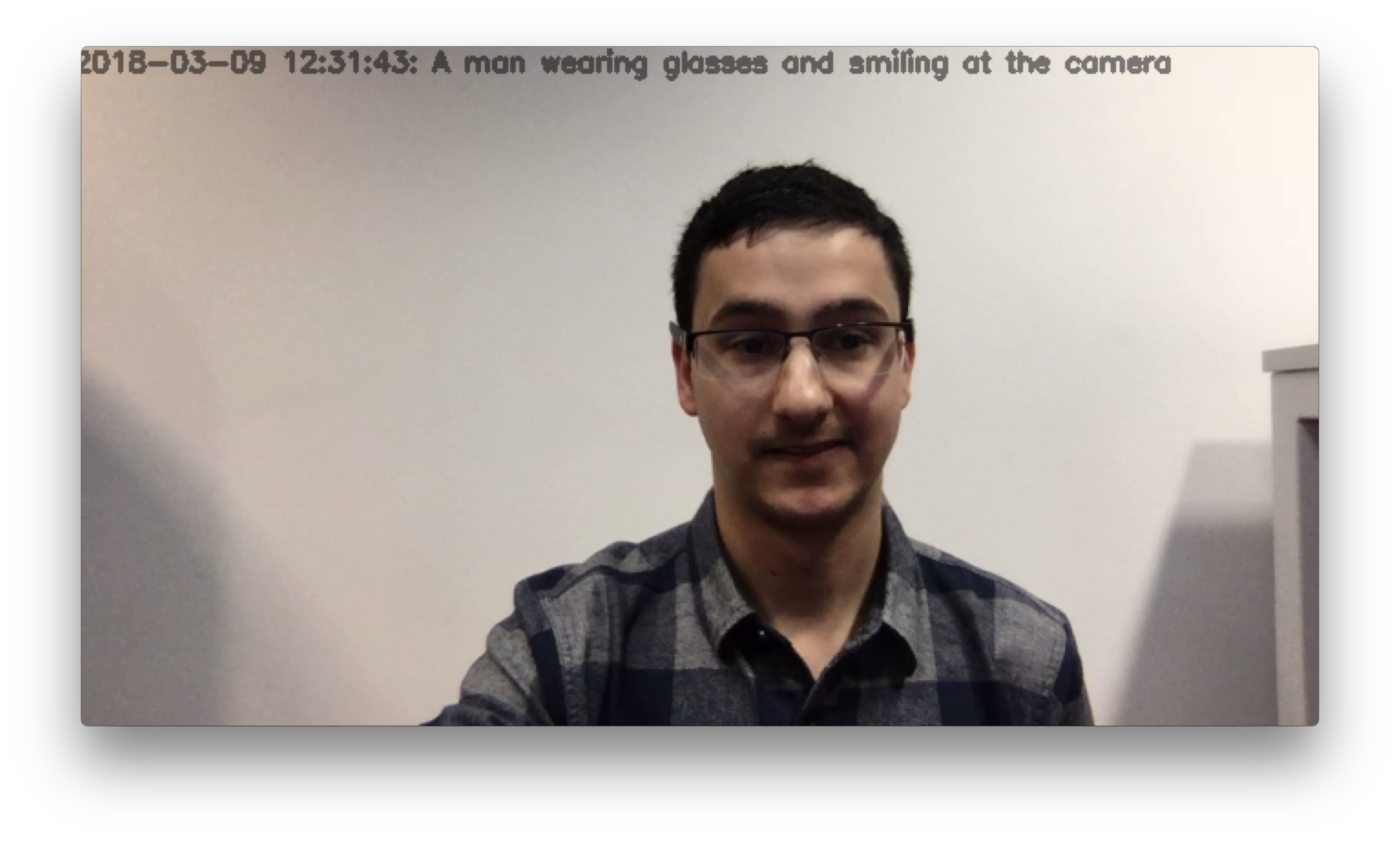

The webcam screenshots above give an idea of the kind of output you will see.